
Schema-First Design for Automation

We design AI systems that turn messy human input into structured insight, reliably and at scale. Drawing on real-world applications in generative AI and predictive modeling, this post explores a schema-first approach to automation and the data-to-insight pipeline it enables. We'll unpack the architecture behind AI systems that translate natural language into actionable data, with lessons on reducing manual effort, increasing adoption, and future-proofing workflows. Whether you're building internal tools or client-facing products, you'll leave with a clear framework for human-to-machine translation that makes predictive AI usable.

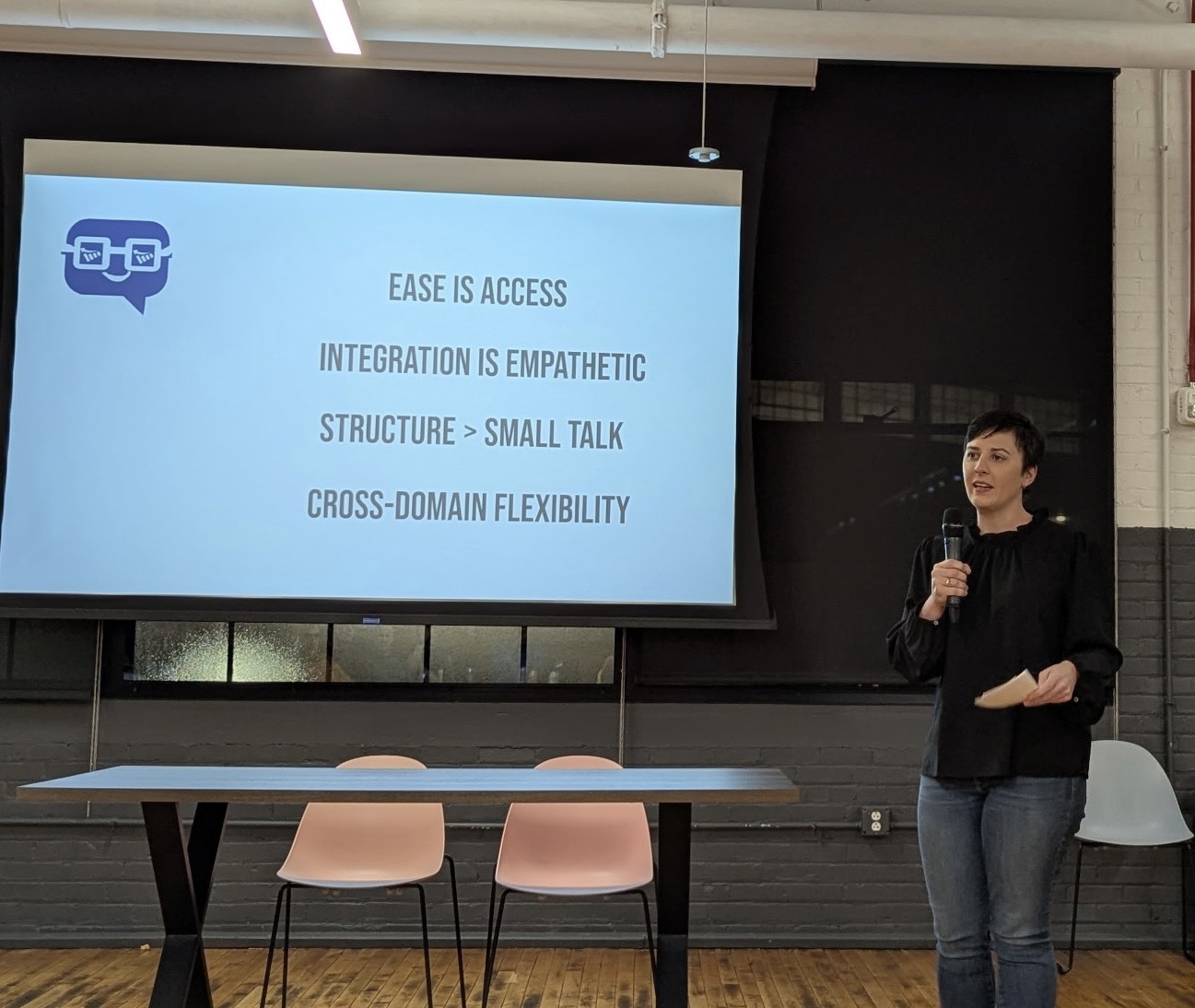

Schema-First Design for Automation: Building AI Systems that Translate Human Input into Action

By Emily Smith, Founder of Side Nerd Apps

When I was a kid, I loved math and hated Spanish. My notebooks were covered with doodles of "quiero morir" in stylized fonts. Ironically, these days I use my terrible Spanish far more than my (formerly solid) calculus. My career has become all about translation—but not between languages. Between people, data, and machines.

At Side Nerd, we build AI systems that translate unstructured, messy input into structured, schema-conforming data so humans and software can act on it. Whether it's logging volunteer hours, capturing Medicare leads, or reporting benefits questions, our system automates translations that humans used to do with significant mental effort.

From Analyst to Architect

My journey began at Analytic Partners, a media mix modeling (MMM) firm. There, I learned to:

- Extract weekly media spend from broken spreadsheets or PDFs.

- Load and test statistical models.

- Translate results into insights and client recommendations.

Each step was a translation:

- Human → Schema: Normalize data into a consistent format (e.g., impressions by channel by week).

- Schema → Insight: Run models to identify incremental revenue and ROI.

- Insight → Decision: Make recommendations.

- Decision → Action: Present data in a way clients can act on.

This is the foundation of a data-to-insight AI pipeline—and each translation step can be misunderstood, ignored, or automated.
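The four translation steps can be sketched as a chain of small functions. This is a minimal illustration, not the pipeline used at Analytic Partners or Side Nerd: the `ChannelWeek` record, the function names, and the `min_roi` rule are all hypothetical, and the "model" is a naive ROI calculation standing in for a real media mix model.

```python
from dataclasses import dataclass


@dataclass
class ChannelWeek:
    """Hypothetical normalized record: one channel, one week."""
    channel: str
    week: str
    spend: float
    revenue: float


def _money(value) -> float:
    # Strip the formatting that tends to show up in hand-built spreadsheets.
    return float(str(value).replace("$", "").replace(",", ""))


def to_schema(raw_rows):
    """Human -> Schema: normalize messy rows into typed records."""
    return [
        ChannelWeek(
            channel=row["Channel"].strip().lower(),
            week=row["Week"].strip(),
            spend=_money(row["Spend"]),
            revenue=_money(row["Revenue"]),
        )
        for row in raw_rows
    ]


def to_insight(records):
    """Schema -> Insight: naive ROI per channel (a stand-in for a real model)."""
    totals = {}
    for record in records:
        spend, revenue = totals.get(record.channel, (0.0, 0.0))
        totals[record.channel] = (spend + record.spend, revenue + record.revenue)
    return {ch: (rev - sp) / sp for ch, (sp, rev) in totals.items() if sp > 0}


def to_decision(roi_by_channel, min_roi=0.0):
    """Insight -> Decision: an illustrative threshold rule."""
    return {
        ch: "maintain or grow" if roi >= min_roi else "reduce"
        for ch, roi in roi_by_channel.items()
    }


def to_action(decisions):
    """Decision -> Action: phrase the result so someone can act on it."""
    return [f"{ch}: {verdict} spend" for ch, verdict in sorted(decisions.items())]


raw = [
    {"Channel": " TV ", "Week": "2025-09-01", "Spend": "$10,000", "Revenue": "$12,500"},
    {"Channel": "Search", "Week": "2025-09-01", "Spend": "$4,000", "Revenue": "$3,000"},
]
actions = to_action(to_decision(to_insight(to_schema(raw))))
print(actions)
```

Keeping each step as its own function makes it easy to see which translation is being automated and to swap in a real model at one stage without touching the others.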

Translation Architecture in the Real World

My brother’s a doctor, and his workflow is nearly identical:

- Collect symptoms

- Structure into vitals, labs, exam notes

- Compare against norms to diagnose

- Prescribe treatment

Different domain. Same schema-first thinking.

At Side Nerd, I designed a system to help nonprofits and businesses collect critical information via text. Why? Because most volunteers and field workers hate portals and apps. But they will text. So we built a schema-first automation framework that maps text to structured logs.

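This work, along with my previous roles, led me to embrace a layered model of translation inspired by the four-stage model of automation from Parasuraman, Sheridan, and Wickens (2000): information acquisition, information analysis, decision and action selection, and action implementation. For each stage, we ask: What can we automate? How do we make it safe, accurate, and actionable?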

Three Principles of Schema-First Automation

1. Add Structure to Complex Logic

Focus: Human → Schema

LLMs are powerful translators. But users don’t speak in schemas, and structured data is essential for AI systems that drive workflow automation.

Our approach at Side Nerd combines prompt engineering and fine-tuning to:

- Parse natural language into structured fields

- Ask fallback questions when information is missing

- Normalize edge cases like "loved your last message"
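The fallback behavior in the list above can be sketched without any model call, assuming the LLM has already returned a dictionary of extracted fields. The field names, the `intent` values, and the question wording here are hypothetical and only illustrate the idea of letting the schema decide what to ask next.

```python
# Hypothetical schema for a volunteer-hours log: field name -> fallback question.
REQUIRED_FIELDS = {
    "hours": "How many hours did you volunteer?",
    "date": "What date was that?",
    "activity": "What were you helping with?",
}


def next_step(parsed: dict) -> dict:
    """Decide what to do with the fields a model extracted from one text message."""
    # Messages such as "loved your last message" carry nothing to log.
    if parsed.get("intent") != "log_hours":
        return {"status": "no_log", "intent": parsed.get("intent")}

    # A field counts as missing if it is absent, None, or an empty string.
    missing = [name for name in REQUIRED_FIELDS if parsed.get(name) in (None, "")]
    if missing:
        return {
            "status": "needs_followup",
            "missing": missing,
            "question": REQUIRED_FIELDS[missing[0]],  # ask one question at a time
        }
    return {"status": "complete", "record": {name: parsed[name] for name in REQUIRED_FIELDS}}


incomplete = next_step({"intent": "log_hours", "hours": 3, "activity": "food bank"})
feedback = next_step({"intent": "feedback"})
complete = next_step(
    {"intent": "log_hours", "hours": 3, "date": "2025-10-21", "activity": "food bank"}
)
print(incomplete["question"])
```

Because the follow-up question comes from the schema, not from the prompt, adding a new required field changes the conversation without rewriting any instructions to the model.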

Our Hierarchical Data Model

We use a shared model across all use cases:

- User Intent: What is the user trying to do?

- Structured Fields: Data needed for analytics or workflow

- Special Requests: Feedback, questions, clarifications

- Contextual Updates: References to prior entries (e.g., corrections to an earlier log)

This schema-first design for automation is what allows our platform to scale across domains—because the schema guides the model, not just the prompt.
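One way to picture the four-part model is as a single record type shared by every use case. The sketch below is an assumption about shape only: the class name, the field names, and the `apply` helper are illustrative and not Side Nerd's actual schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ParsedMessage:
    """Hypothetical container mirroring the four layers of the data model."""
    user_intent: str                                        # what the user is trying to do
    structured_fields: dict = field(default_factory=dict)   # data for analytics or workflow
    special_requests: list = field(default_factory=list)    # feedback, questions, clarifications
    updates_entry_id: Optional[str] = None                  # set when the message refers to a prior entry


def apply(message: ParsedMessage, entries: dict) -> dict:
    """Store a new entry, or merge a contextual update into the entry it refers to."""
    if message.updates_entry_id is not None:
        entries[message.updates_entry_id].update(message.structured_fields)
    else:
        entries[f"entry_{len(entries) + 1:03d}"] = dict(message.structured_fields)
    return entries


entries = {}
apply(ParsedMessage("log_hours", {"hours": 3, "activity": "food bank"}), entries)
# A later text like "actually it was 4 hours" becomes a contextual update.
apply(ParsedMessage("update_entry", {"hours": 4}, updates_entry_id="entry_001"), entries)
print(entries)
```

The same container works whether the structured fields describe volunteer hours or a Medicare lead; only the contents of `structured_fields` change per domain.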

Why Fine-Tune?

While prompting with examples is faster to set up, it lacks resilience. Fine-tuning embeds expertise directly into the model, like hiring a trained specialist instead of relying on good instructions.

Prompting is HubSpot. Fine-tuning is Salesforce. Prompting is Google Sheets. Fine-tuning is BigQuery.

Fine-tuning helps us:

- Reduce latency

- Improve consistency

- Support branching logic across clients

2. Wrap Insights in Metadata and Validation

Focus: Schema → Insight

An AI-generated insight is meaningless if it isn’t traceable or trusted. We wrap each one in metadata:

- Model version

- Timestamp

- Confidence level

- Triggered rules or flags

- Audit trail

This makes our system auditable and safe—especially in high-stakes domains like Medicare or HR.
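A minimal sketch of such a wrapper follows. The `Insight` class and `validate` function are hypothetical, the numbers mirror the MMM example in this section, and reading the 25% flag as "change relative to baseline" is an assumption made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Insight:
    """Hypothetical wrapper: a model output plus the metadata needed to trust it."""
    insight_id: str
    model_version: str
    value: float
    baseline: float
    confidence_interval: tuple
    generated_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    triggered_flags: list = field(default_factory=list)
    audit_trail: list = field(default_factory=list)
    requires_human_review: bool = False


def validate(insight: Insight, max_relative_delta: float = 0.25) -> Insight:
    """Apply simple rules, record what was checked, and decide whether a human must review."""
    if insight.baseline == 0:
        raise ValueError("baseline must be non-zero to compute a relative delta")

    relative = abs(insight.value) / abs(insight.baseline)
    insight.audit_trail.append(f"relative_delta={relative:.3f}")
    if relative > max_relative_delta:
        insight.triggered_flags.append(f"delta_exceeds_{max_relative_delta:.0%}")

    lower, upper = insight.confidence_interval
    if lower <= 0 <= upper:
        # The interval cannot rule out "no change", so the sign of the effect is uncertain.
        insight.triggered_flags.append("interval_includes_zero")

    insight.requires_human_review = bool(insight.triggered_flags)
    return insight


insight = validate(
    Insight(
        insight_id="insight_2025q4_3419",
        model_version="mmm_v4.5.1",
        value=-0.067,
        baseline=0.22,
        confidence_interval=(-0.121, -0.012),
    )
)
print(insight.triggered_flags, insight.requires_human_review)
```

Every rule that fires leaves a trace in `triggered_flags` or `audit_trail`, so a reviewer can see why an insight was escalated without rerunning the model.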

Insight-Metadata Example (MMM)

    {
      "insight_id": "insight_2025q4_3419",
      "generated_at": "2025-10-21T18:03:45Z",
      "model_version": "mmm_v4.5.1",
      "model_type": "Bayesian Ridge Regression",
      "training_data_range": {
        "start_date": "2024-10-01",
        "end_date": "2025-09-30"
      },
      "prediction_type": "ROI Forecast Delta",
      "prediction_value": -0.067,
      "units": "return_on_ad_spend_delta",
      "confidence_interval_95": {
        "lower": -0.121,
        "upper": -0.012
      },
      "baseline_reference": 0.22,
      "affected_channel": "Meta Ads",
      "triggered_flags": [
        "delta_exceeds_25%",
        "active_campaign=True"
      ],
      "audit_log_url": "https://side-nerd.com/audit/insight_2025q4_3419",
      "requires_human_review": true,
      "related_insights": [
        {
          "insight_id": "insight_2025q4_2981",
          "date": "2025-09-28",
          "delta": -0.031,
          "same_channel": true,
          "reviewed_by": "judge_model",
          "status": "approved"
        },
        {
          "insight_id": "insight_2025q3_2210",
          "date": "2025-08-20",
          "delta": -0.052,
          "status": "escalated"
        }
      ],
      "trend_over_time": {
        "channel": "Meta Ads",
        "last_4_weeks": [-0.01, -0.03, -0.05, -0.067],
        "slope": -0.018
      }
    }

We also include context snapshots for each insight:

- Active campaigns

- Media spend shifts

- Tracking reliability

- News or external events

📦 Example: context_snapshot.json for MMM

    {
      "insight_id": "insight_2025q4_3419",
      "active_campaigns": [
        "Fall TV Push",
        "Instagram UGC Refresh"
      ],
      "media_spend_changes": {
        "Meta Ads": {
          "previous_week": 42000,
          "current_week": 72000,
          "percent_change": 71.4
        }
      },
      "pricing_change_event": {
        "product_line": "Wellness Supplements",
        "type": "Discount Increase",
        "change_percent": -20,
        "effective_date": "2025-10-15"
      },
      "tracking_integrity": {
        "utm_params": "clean",
        "offline_channel_lag_days": 5,
        "clickstream_data_coverage": 0.92,
        "media_cost_fill_rate": 1.0
      },
      "external_events": [
        {
          "type": "NewsEvent",
          "description": "New FDA guidance on supplement labeling",
          "impact_level": "moderate",
          "date": "2025-10-17"
        }
      ],
      "data_snapshot_completeness": {
        "clickstream": 0.92,
        "sales_crm": 0.88,
        "media_costs": 1.0
      }
    }

This gives reviewers or judge models the information they need to interpret and trust the AI’s recommendations.
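As a rough illustration of how a reviewer or judge model might use a context snapshot, the sketch below scans a trimmed copy of the example for two things: large week-over-week spend swings and incomplete data sources. The function name and both thresholds (`min_completeness`, `max_spend_swing_pct`) are assumptions, not values from the platform.

```python
# Trimmed copy of the context snapshot example, keeping only the fields used here.
snapshot = {
    "media_spend_changes": {
        "Meta Ads": {"previous_week": 42000, "current_week": 72000},
    },
    "data_snapshot_completeness": {
        "clickstream": 0.92,
        "sales_crm": 0.88,
        "media_costs": 1.0,
    },
}


def review_notes(snapshot, min_completeness=0.90, max_spend_swing_pct=50.0):
    """Return plain-language notes a reviewer should see alongside the insight."""
    notes = []

    # Large spend swings can explain a forecast change on their own.
    for channel, spend in snapshot["media_spend_changes"].items():
        previous, current = spend["previous_week"], spend["current_week"]
        pct = 100.0 * (current - previous) / previous
        if abs(pct) > max_spend_swing_pct:
            notes.append(f"{channel}: spend changed {pct:+.1f}% week over week")

    # Incomplete inputs weaken whatever the model concluded from them.
    for source, coverage in snapshot["data_snapshot_completeness"].items():
        if coverage < min_completeness:
            notes.append(f"{source}: only {coverage:.0%} complete")

    return notes


notes = review_notes(snapshot)
for note in notes:
    print(note)
```

Recomputing the spend change from the raw weekly figures, instead of trusting a stored `percent_change`, also acts as a light consistency check on the snapshot itself.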

3. Make It Callable and Usable

Focus: Insight → Decision → Action

Even great models fail when outputs aren’t integrated. Our AI platform ensures:

- Insights are exposed via API or webhook

- Outputs match decision-makers’ mental models

- Triggers lead to useful action (e.g., personalized SMS follow-ups)

We use rule-based logic where appropriate, and let LLMs assist, not dominate, system behavior.
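The split between rules and LLM assistance might look like the sketch below: an ordered rule list picks the action, and any model-written text is carried along as a summary only. The rule names, the action names, and the payload shape are hypothetical, and no request is actually sent; the function just builds the JSON body a webhook could deliver.

```python
import json

# Ordered rules: the first predicate that matches decides the action.
# Action names and conditions are illustrative.
RULES = [
    ("route_to_reviewer", lambda insight: insight["requires_human_review"]),
    ("send_sms_follow_up", lambda insight: insight["prediction_value"] < 0),
]


def choose_action(insight: dict) -> str:
    """Deterministic routing: same insight in, same action out."""
    for action, applies in RULES:
        if applies(insight):
            return action
    return "log_only"


def build_webhook_payload(insight: dict, draft_summary: str = "") -> str:
    """Serialize the decision. draft_summary may be LLM-written, but it never picks the action."""
    return json.dumps(
        {
            "insight_id": insight["insight_id"],
            "action": choose_action(insight),
            "summary": draft_summary,
        },
        sort_keys=True,
    )


insight = {
    "insight_id": "insight_2025q4_3419",
    "prediction_value": -0.067,
    "requires_human_review": True,
}
payload = build_webhook_payload(
    insight, draft_summary="Meta Ads ROAS forecast is down versus baseline."
)
print(payload)
```

Because the review rule sits first in the list, an insight flagged for human review is never auto-actioned, whatever the model-written summary says.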

From Prompt to Platform

What began with "Can we extract hours from a text?" became a platform that handles use cases like logging volunteer hours, capturing Medicare leads, and reporting benefits questions, all with schema-first AI systems that translate human input into structured action.

Being a translation architect means going beyond prompts. It means designing systems that guide the entire journey—from unstructured language to clear, auditable, useful outcomes.

Want to connect or collaborate? Follow me on LinkedIn or send an email to emily@sidenerdapps.com